Automatic Extraction: Better web data with less hassle

Getting hold of clean, accurate web data – quickly, and in a format that’s easy to manage – is a struggle for many organizations.

Until recently, automatic extraction seemed out of reach because of the number of variables involved, from page layout to markup that differs on every site.

Let’s imagine you need to get product names or prices from an e-commerce marketplace for comparison purposes. Web scraping traditionally means identifying product pages on a site and then extracting relevant information from those pages.

To achieve this, your developer needs to inspect the site’s source code and then write more code to pick out the relevant parts, such as links, product names, and prices.

There are excellent tools for this, including CSS and XPath selectors and free, open-source frameworks like Scrapy.
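To make the traditional approach concrete, here is a minimal sketch of selector-based extraction using only Python’s standard library. The markup, class names, and the `extract_products` helper are invented for illustration. ElementTree only accepts well-formed markup and a limited XPath subset, so a real project would more likely use Scrapy’s selectors.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing markup (not from any real site).
PAGE = """
<html><body>
  <div class="product">
    <a class="title" href="/p/1">Blue Mug</a>
    <span class="price">4.99</span>
  </div>
  <div class="product">
    <a class="title" href="/p/2">Red Teapot</a>
    <span class="price">18.50</span>
  </div>
</body></html>
"""


def extract_products(markup):
    """Pick out the link, name, and price for each product block."""
    root = ET.fromstring(markup.strip())
    products = []
    # Site-specific selectors: these only work for this exact page structure.
    for node in root.findall(".//div[@class='product']"):
        link = node.find("a[@class='title']")
        price = node.findtext("span[@class='price']")
        products.append({
            "url": link.get("href"),
            "name": link.text.strip(),
            "price": float(price),
        })
    return products


products = extract_products(PAGE)
for product in products:
    print(product)
```

Every selector here is tied to one site’s markup, which is exactly the part that has to be rewritten for each new site.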

Finding answers fast

One method is hiring a couple of enthusiastic interns to copy and paste the information you’re looking for, but they’ll soon be struggling on larger-scale projects.

Alternatively, you might use commercially available automatic extraction software or scraping apps.

Or if you’re feeling brave you could try writing your own script for web scraping.

But what if you had a solution that could do it for you automatically? What if you could get usable data quickly and efficiently, to help drive important decision-making for your business?

In this article, we’ll look at automatic data extraction, how it works, and how automatic extraction can get you better web data, minus the hassle.

It's automatic

At Zyte, we believe every business should be able to access the web data it needs, quickly and cost-effectively.

Web scraping, or data extraction, is one of the most useful tools for businesses to grow and stay ahead of the competition.

That is why we designed Zyte’s Automatic Extraction API — a comprehensive solution that does just that.

Automatic Extraction key features:

Extract structured data from web pages easily

No need to write site-specific code. Just feed URLs of individual pages you want to scrape, and the API serves up your requested data in a standard schema.
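As a rough illustration of what feeding URLs can look like, the sketch below builds a JSON request body that pairs each page URL with a requested data type. The `url` and `pageType` field names and the `build_queries` helper are assumptions made for this example, not confirmed details of the API, so check the official documentation before relying on them.

```python
import json


def build_queries(urls, page_type):
    """Pair each page URL with the data type to extract from it."""
    # "url" and "pageType" are assumed field names, not confirmed API details.
    return [{"url": url, "pageType": page_type} for url in urls]


queries = build_queries(
    ["https://shop.example.com/p/1", "https://shop.example.com/p/2"],
    "product",
)
request_body = json.dumps(queries, indent=2)
print(request_body)
```

The point of the sketch is that the request carries only page URLs and a data type, with no selectors or other site-specific logic.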

Powerful AI

Automatic Extraction harnesses deep learning methods, helping you to retrieve clean, accurate data in seconds rather than days or weeks. It also supports more than 40 languages, so you can scrape web data from sites around the world.

Reliable and adaptive

Your own data extractor scripts can break if a web page changes, but Automatic Extraction reliably gets the data you want, even from dynamic sites. It’s a huge time saver, taking away the pain of having to maintain your own code.

Easy to use

Our friendly self-serve interface lets you convert whole websites or specific pages to datasets, in just a few clicks. Our API also makes it possible to get data from many different web page types.

All in all, the Automatic Extraction API lets you extract structured data from web pages without writing site-specific code.

Benefits of automatic data extraction

- Accurate data — lower risk of errors

- Scalability — useful for large businesses or smaller enterprises looking to scale up operations

- Efficiency — quick processing saves you time and cost

Say you’re building a comparison tool that lets your customers browse prices and availability for high street fashions or automotive parts across many different sources.

Automatic extraction makes it easy to get reliable data aggregated from e-commerce sites, news articles, blogs and more.

Do it yourself

At Zyte, we always want to go one better. Now we’re excited to introduce our friendly self-serve interface for Automatic Extraction, which lets you convert whole websites or specific pages to datasets in just a few clicks.

Wouldn’t it be fantastic if you could focus on profitable business activities instead of writing spiders to collect all those relevant page URLs?

Automatic Extraction neatly meets this need, handling both extraction and crawling without manual intervention.

It’s effectively a spidering service based on the same AI/machine learning technology used in our API. And it also features built-in ban management, using automatic IP rotation to prevent blocking so you don’t need to babysit every crawl.
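To give a sense of what IP rotation involves when you manage it yourself, here is a minimal round-robin sketch. It is a generic illustration of the technique, not a description of how Zyte’s ban management works. The proxy addresses and the `assign_proxies` helper are placeholders.

```python
from itertools import cycle

# Placeholder proxy addresses, for illustration only.
PROXIES = [
    "http://proxy-a.example.com:8000",
    "http://proxy-b.example.com:8000",
    "http://proxy-c.example.com:8000",
]


def assign_proxies(urls, proxies):
    """Assign proxies to URLs in round-robin order."""
    pool = cycle(proxies)
    return [(url, next(pool)) for url in urls]


urls = [f"https://shop.example.com/p/{n}" for n in range(1, 5)]
assignments = assign_proxies(urls, PROXIES)
for url, proxy in assignments:
    print(url, "via", proxy)
```

A production setup would also need to detect bans, retire bad proxies, and throttle requests, which is the babysitting that built-in ban management takes off your hands.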

How to use Zyte Automatic Extraction

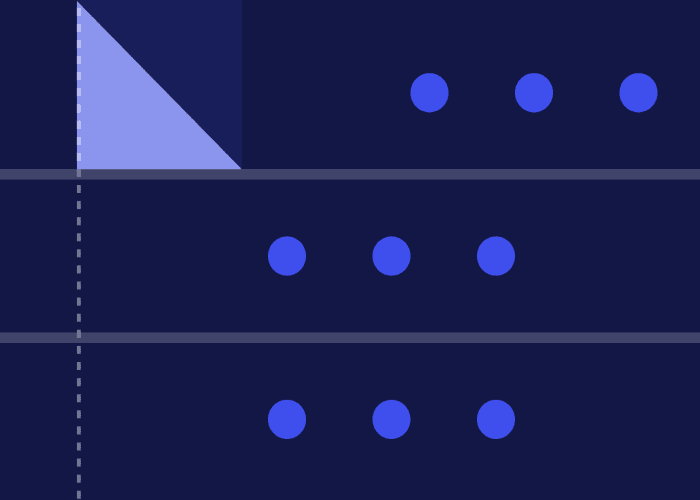

Three simple steps to get data using Zyte’s Automatic Extraction API:

- Select the data type you want to extract and enter your URL.

- Let Automatic Extraction do its thing. You can even leave it running while you get on with other work.

- Fetch your clean, usable data. That’s all there is to it!

The Automatic Extraction API currently supports 10 types of data, including news and articles, products, reviews, and more.

Leverage the power of automatic extraction

So how do we do it?

Zyte’s Automatic Extraction API operates efficiently thanks to a powerful AI/machine learning model.

It ‘sees’ a web page much as a human does, with a screenshot of the entire page and its visual elements as they’re rendered by an actual browser.

These inputs are combined with the source code and processed by a deep neural network, allowing the model to understand relationships between different elements on a page. Trained on a wide range of different domains, the model also understands the inner structure of a page much as a web developer would.

Our downloading and rendering backend powers all Automatic Extraction requests, using modern browser engines and refined ban avoidance techniques.

This significantly reduces problems like banning and poor rendering — improving the quality of extraction results, especially for product pages.

That’s not all — there’s also smart, automated crawling

Our Automatic Extraction API has helped our clients get data far faster than manual extraction, but using it still means pulling some developer resources away from other tasks.

Let’s say you want to extract products from the 'Arts and Crafts' category of an online marketplace. You first need a list of all the URLs you want to scrape, which means writing code to crawl the site and collect individual page URLs to feed into our API for extraction.
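A minimal sketch of that URL-collection step, using only Python’s standard library, might look like the following. The category markup, the `/product/` URL pattern, and the `product_urls` helper are assumptions for illustration. A real crawler would also fetch pages, follow pagination, and handle errors.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect absolute URLs from every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            self.links.append(urljoin(self.base_url, href))


def product_urls(html, base_url, marker="/product/"):
    """Return de-duplicated links that look like product pages."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    unique = []
    for link in collector.links:
        if marker in link and link not in unique:
            unique.append(link)
    return unique


# Hypothetical category page markup.
CATEGORY_HTML = """
<a href="/product/101">Watercolour set</a>
<a href="/product/102">Sketch pad</a>
<a href="/product/101">Watercolour set (image link)</a>
<a href="/arts-and-crafts?page=2">Next</a>
"""

urls = product_urls(CATEGORY_HTML, "https://shop.example.com/arts-and-crafts")
print(urls)
```

Even this small example depends on a site-specific URL pattern, so the crawling code has to be adapted for each marketplace.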

Our built-in smart automated crawler capability can help. It’s easy to use, letting you get the data you want without any coding.

How it works

- Access the automated crawler via the ‘Datasets’ tab in the left-side navigation.

- Select the data type you want. Customization options are available if you need them.

- Choose an Extraction Strategy: collect all products from a website (Full Extraction), or fetch products within a particular category, starting from the specified page (Category Extraction).

- Tweak the extraction request limit. A low limit lets you experiment without worrying about running out of credits, while larger limits support production-scale crawls.

- Select how you want to receive your data: set up S3 export, or skip this option and use the built-in JSON export. Now you’re ready to start getting data.

- Hit ‘Start’ and you’ll see products start appearing in seconds. There’s a preview of the items you’re about to extract, plus crawl statistics: the number of requests used out of your specified limit, crawl speed in requests per minute, and field coverage.
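If you export the JSON and want to reproduce similar statistics yourself, a sketch like the one below could work. It assumes that field coverage means the share of extracted items with a non-empty value for a given field, which is our reading of the term rather than a documented definition. The sample items and function names are hypothetical.

```python
def field_coverage(items, fields):
    """Share of items with a non-empty value for each field (assumed definition)."""
    total = len(items)
    coverage = {}
    for field in fields:
        filled = sum(1 for item in items if item.get(field) not in (None, "", []))
        coverage[field] = filled / total if total else 0.0
    return coverage


def requests_per_minute(requests_used, elapsed_seconds):
    """Average crawl speed over the elapsed time."""
    return requests_used * 60 / elapsed_seconds if elapsed_seconds > 0 else 0.0


# Hypothetical extracted items.
items = [
    {"name": "Watercolour set", "price": "12.00", "sku": "WC-1"},
    {"name": "Sketch pad", "price": "6.50"},
    {"name": "Brush pack", "price": ""},
    {"name": "Easel", "price": "45.00", "sku": None},
]

coverage = field_coverage(items, ["name", "price", "sku"])
speed = requests_per_minute(120, 90)
print(coverage, speed)
```

Low coverage for a field you care about is a useful early signal to review the crawl before raising the request limit.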

This friendly web interface doesn’t replace our original API, which lets developers integrate Automatic Extraction into their own applications. And while fully automated crawling is easy to set up, the API lets you deploy custom crawling strategies tailored to your specific business needs.

New backend, better quality data, and automatic extraction

We switched to a new downloading and rendering backend that powers all Automatic Extraction requests, using modern browser engines and refined ban avoidance techniques.

This has significantly reduced problems like banning and poor rendering, improving the quality of extraction results, especially for product pages.

Conclusion

Data extraction can be a complicated process, given the multitude of tools and techniques available, but it doesn’t have to be.

With the right automatic extraction solution, you’ll get accurate data quickly, so you can make better decisions about how to improve your products and services and grow your business.

Try Automatic Extraction for free, or talk to our experts to discover custom data extraction solutions that work for your business.