Did you know that 90% of startups will fail? It’s a sobering statistic that hangs over every founder. The primary reason for this high failure rate isn’t a lack of ideas or passion; it’s an inability to turn a profit.

That challenge is particularly acute in the world of web scraping. Many of us in this field began with a simple script—a clever piece of code to solve a specific data extraction problem. But there's a big difference between writing a script and building a scalable, profitable business. Most web scraping projects, unfortunately, never make the leap.

Why is this so common? Because the business of web scraping is an iceberg. On the surface, it seems straightforward: extract data, deliver it, get paid. But beneath the surface lie immense, often untracked, hidden costs that steadily erode profitability. These include:

High cost of proxies: A necessary evil for any large-scale operation.

High maintenance & support costs: Websites change, scrapers break, and clients need help.

Compliance costs: Navigating the legal landscape of data extraction is complex and critical.

R&D costs: Staying ahead of anti-bot technologies requires constant innovation.

So, how do you go from a scripting side hustle to a real, profitable business?

What a web scraping business can look like

First, it’s important to understand that a "web scraping business" can take many forms. The model you choose will fundamentally shape your cost structure and path to profitability.

Traditional service business

This is the most straightforward model. It's project-based and labor-intensive, relying on building custom scraping jobs for individual clients. You are essentially trading time for money.

Managed service

An evolution of the service model, this involves recurring contracts with higher-value clients. It requires significant operational effort to maintain scrapers and support clients continuously.

Platform (SaaS)

This model focuses on building scalable, self-service tools that enable users to configure and run scraping tasks themselves. It balances automation with user flexibility.

Product (data marketplaces)

Here, the focus shifts to creating reusable, pre-packaged datasets sold on marketplaces or directly to customers. This model allows for higher margins through scalable, one-to-many data products.

Internal function

Many companies embed scraping capabilities inside another business function, like sales intelligence or dynamic pricing. While not a standalone business, it still faces the same profitability challenges.

Whether you're founding a new company or managing an internal data team, understanding the economics of your chosen model is the first step toward building a sustainable operation.

Unpacking the unit economics: Where the money really goes

To build a profitable business, you must get granular with your numbers. The most critical metric for any web scraping operation is the Cost of Goods Sold (COGS). This represents every dollar you spend to deliver your final product or service to the customer.

It's crucial to distinguish between COGS and other operational expenses.

COGS: Production environments, proxies, customer support, engineering (DevOps & maintenance), and tools directly used for production (CAPTCHA management, proxy APIs, browser automation).

Operational expenses: R&D, sales and marketing, general business administration, and internal or development tools.
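To make the split concrete, here is a minimal Python sketch that files expense line items into the two buckets above. The category labels, the `Expense` type, and the `split_costs` helper are hypothetical names chosen for illustration, so map them to however your own books are organized. Raising an error on an unknown category is a deliberate choice: nothing gets silently misfiled.

```python
from dataclasses import dataclass

# Hypothetical category labels mirroring the COGS / operational-expense split above.
COGS_CATEGORIES = {
    "production_infrastructure",
    "proxies",
    "customer_support",
    "devops_maintenance",
    "production_tools",  # CAPTCHA management, proxy APIs, browser automation
}
OPEX_CATEGORIES = {"rnd", "sales_marketing", "general_admin", "internal_tools"}


@dataclass
class Expense:
    description: str
    category: str
    amount: float


def split_costs(expenses: list[Expense]) -> tuple[float, float]:
    """Return (cogs_total, opex_total); raise on any category that is in neither bucket."""
    cogs = opex = 0.0
    for expense in expenses:
        if expense.category in COGS_CATEGORIES:
            cogs += expense.amount
        elif expense.category in OPEX_CATEGORIES:
            opex += expense.amount
        else:
            raise ValueError(f"Unclassified category: {expense.category!r}")
    return cogs, opex


# Hypothetical line items for illustration only.
expenses = [
    Expense("Residential proxy plan", "proxies", 3_000.0),
    Expense("Scraper cluster", "production_infrastructure", 2_000.0),
    Expense("Anti-bot research", "rnd", 1_500.0),
    Expense("Ad campaign", "sales_marketing", 1_000.0),
]
print(split_costs(expenses))  # (5000.0, 2500.0)
```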

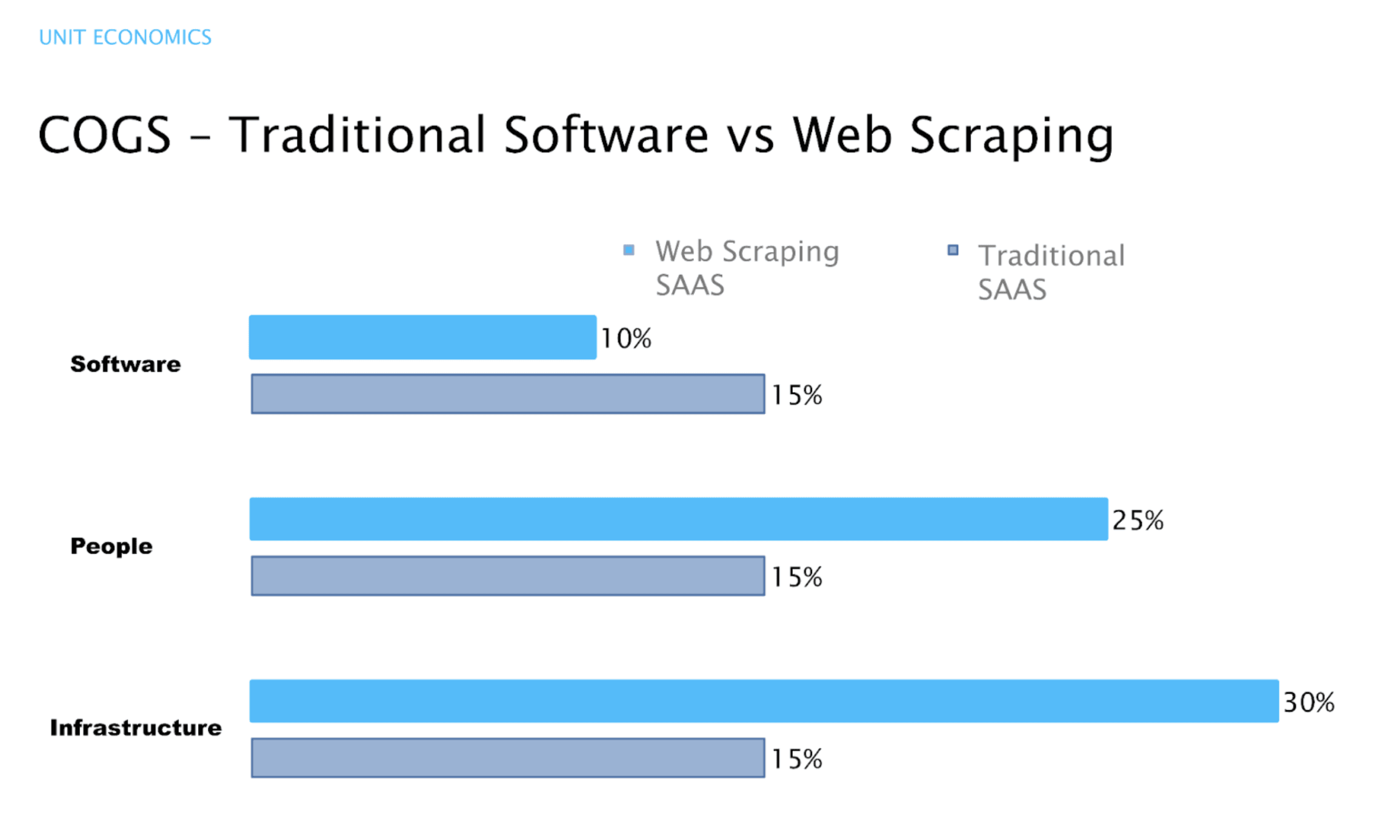

When you compare the COGS of a web scraping business to that of a traditional SaaS company, the differences are stark.

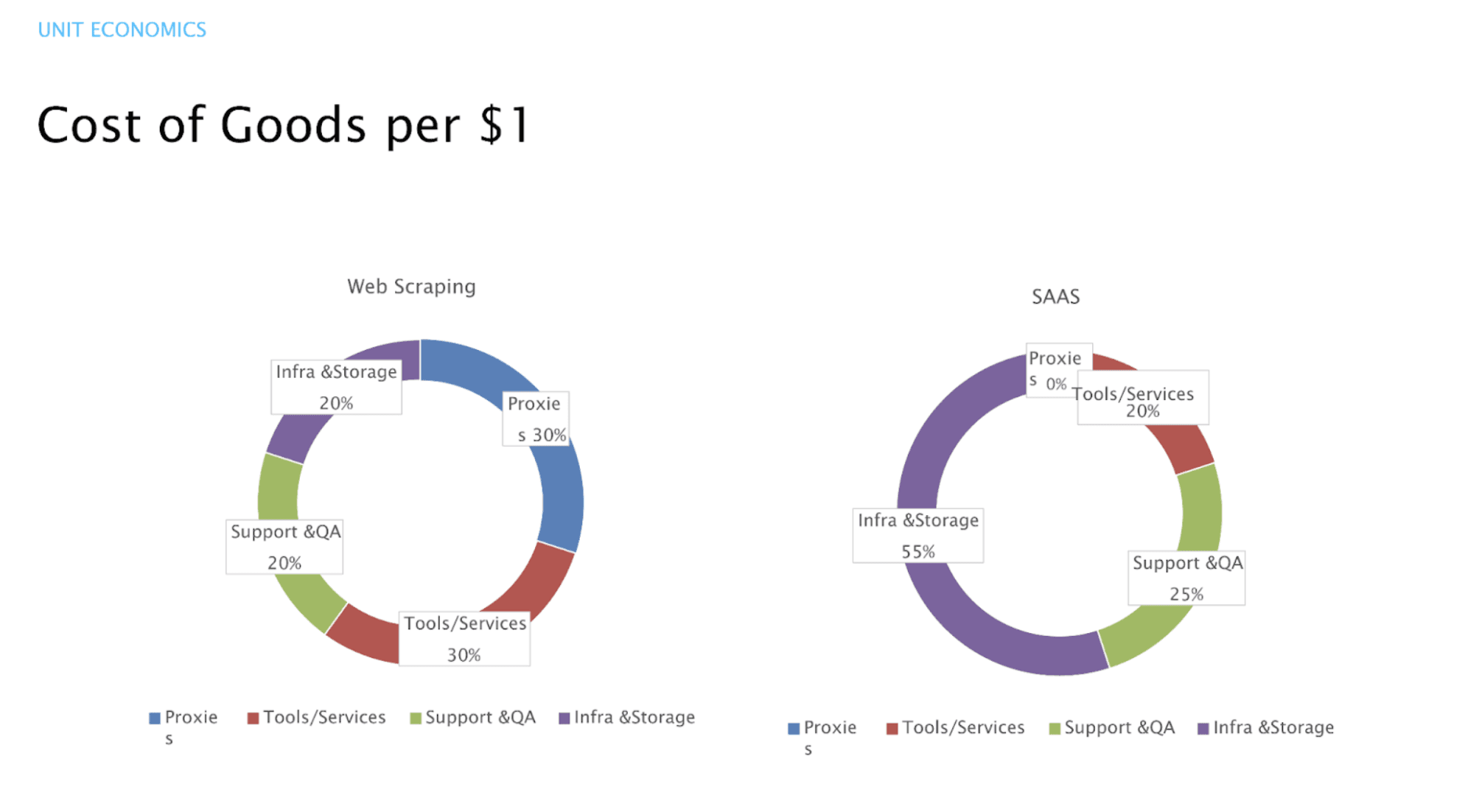

Looking at how each COGS dollar is split, a typical SaaS business might spend 55% on infrastructure and storage, 25% on support, and 20% on tools. Critically, its proxy cost is 0%.

A web scraping business, however, looks very different. Proxies can easily consume 30% of your COGS. Tools and specialized services can take another 30%.


That leaves your infrastructure and support budgets squeezed to only 20% each. Right out of the gate, the web scraping business model comes with a significant, inherent cost that traditional software companies simply don't have.

This leads to a fundamental difference in margins. While a traditional SaaS business can achieve gross margins of 75-80% or more, a web scraping business often struggles to get out of the 50-60% range.
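Gross margin is revenue minus COGS, divided by revenue. The sketch below uses round, made-up figures picked only to land inside the ranges quoted above, and shows how much a cut in proxy spend moves the margin.

```python
def gross_margin(revenue: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue: (revenue - COGS) / revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cogs) / revenue


revenue = 100_000.0
saas_cogs = 22_000.0      # hypothetical SaaS COGS
scraping_cogs = 45_000.0  # hypothetical scraping COGS
proxy_spend = 13_500.0    # 30% of the scraping COGS, per the split above

print(f"SaaS: {gross_margin(revenue, saas_cogs):.0%}")          # SaaS: 78%
print(f"Scraping: {gross_margin(revenue, scraping_cogs):.0%}")  # Scraping: 55%

# Cutting proxy spend by a third removes 4,500 of COGS.
improved = gross_margin(revenue, scraping_cogs - proxy_spend / 3)
print(f"Scraping after proxy cut: {improved:.1%}")  # Scraping after proxy cut: 59.5%
```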

So, what does a "good" web scraping business look like from a numbers perspective? Based on industry benchmarks and our own experience, these are the targets you should be aiming for:

LTV-to-CAC ratio: 3:1

R&D spend: ~30% of revenue

Net Revenue Retention (NRR): ~110%

Customer Acquisition Cost (CAC): ~35%

Net profit: ~20%
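Two of these targets depend on formulas worth writing down. The sketch below uses one common simplification of lifetime value (monthly gross profit per customer divided by monthly churn) and the usual cohort definition of NRR. Your own finance team may define both differently, and every input here is a hypothetical figure.

```python
def ltv(monthly_revenue: float, gross_margin: float, monthly_churn: float) -> float:
    """Simplified lifetime value: monthly gross profit per customer / monthly churn rate."""
    return monthly_revenue * gross_margin / monthly_churn


def nrr(starting_mrr: float, expansion: float, contraction: float, churned: float) -> float:
    """Net revenue retention of one customer cohort over a period, as a fraction."""
    return (starting_mrr + expansion - contraction - churned) / starting_mrr


# Hypothetical inputs, for illustration only.
customer_ltv = ltv(monthly_revenue=2_000.0, gross_margin=0.6, monthly_churn=0.02)
cac = 20_000.0

print(round(customer_ltv))           # 60000
print(round(customer_ltv / cac, 2))  # 3.0
print(round(nrr(100_000.0, expansion=15_000.0, contraction=2_000.0, churned=3_000.0), 2))  # 1.1
```

In this made-up example the LTV-to-CAC ratio lands exactly on the 3:1 target, and expansion revenue outweighing contraction and churn is what pushes NRR to 110%.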

Achieving these numbers isn't easy, but it is possible. It requires a deliberate, multi-step strategy.

The path to SaaS-like margins

Moving from a low-margin service to a high-margin, scalable business is a journey. Here is a three-step path we've followed to achieve SaaS-like margins.

Step 1: Get to 58% gross margin

When you’re just starting, your margins will likely be low. Your operation will be heavy on manual support, and you’ll be figuring out the most efficient way to manage your costs. The primary focus at this stage is simple: get a handle on your proxy costs and streamline your manual support processes as much as possible.
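A useful first step toward getting a handle on proxy costs is to measure cost per successful request rather than cost per request, because a cheap pool with a low success rate can end up costing more. This minimal sketch assumes a hypothetical request log of `(tier, cost, succeeded)` tuples with made-up prices.

```python
from collections import defaultdict


def cost_per_success(log):
    """Aggregate (tier, cost_usd, succeeded) records into cost per successful request per tier."""
    spend = defaultdict(float)
    successes = defaultdict(int)
    for tier, cost, succeeded in log:
        spend[tier] += cost
        successes[tier] += int(succeeded)
    # A tier with zero successes has an unbounded cost per success.
    return {
        tier: (spend[tier] / successes[tier] if successes[tier] else float("inf"))
        for tier in spend
    }


# Hypothetical log: 10 cheap datacenter requests (2 succeed), 4 pricier residential ones (all succeed).
log = [("datacenter", 0.001, i < 2) for i in range(10)] + [("residential", 0.004, True)] * 4

for tier, value in sorted(cost_per_success(log).items()):
    print(f"{tier}: ${value:.4f} per success")
# datacenter: $0.0050 per success
# residential: $0.0040 per success
```

With these numbers, the proxy that is four times more expensive per request turns out to be cheaper per useful result.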

Step 2: Reach 65% gross margin

To move to the next level, you need to start investing in efficiency. This means:

Invest in R&D: Develop smarter crawlers that are more resilient to website changes and more efficient in their requests.

Automate support: Build internal tools and customer-facing documentation to reduce the manual cost of support.

Optimize proxy usage: Implement intelligent proxy rotation and allocation to get the most value out of every dollar you spend on proxies.
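As one illustration of rotation, here is a minimal round-robin rotator that benches a proxy for a cooldown period after it fails. The `ProxyRotator` class, the 60-second default, and the injectable clock are assumptions made for this sketch, not any vendor's API. Production systems usually layer per-domain state and health scoring on top.

```python
import time
from collections import deque


class ProxyRotator:
    """Round-robin proxy rotation that benches a proxy for `cooldown` seconds after a failure."""

    def __init__(self, proxies, cooldown=60.0, clock=time.monotonic):
        self._queue = deque(proxies)
        self._benched_until = {}  # proxy -> clock time when it may be used again
        self._cooldown = cooldown
        self._clock = clock

    def next_proxy(self):
        """Return the next proxy not on cooldown, or None if all are benched."""
        now = self._clock()
        for _ in range(len(self._queue)):
            proxy = self._queue[0]
            self._queue.rotate(-1)  # move the candidate to the back of the line
            if self._benched_until.get(proxy, float("-inf")) <= now:
                return proxy
        return None

    def report_failure(self, proxy):
        self._benched_until[proxy] = self._clock() + self._cooldown


# Usage with a fake clock so the behavior is deterministic.
fake_now = [0.0]
rotator = ProxyRotator(["p1", "p2", "p3"], cooldown=30.0, clock=lambda: fake_now[0])
print(rotator.next_proxy(), rotator.next_proxy())  # p1 p2
rotator.report_failure("p3")
print(rotator.next_proxy())  # p1  (p3 is skipped while benched)
fake_now[0] = 31.0
print(rotator.next_proxy(), rotator.next_proxy())  # p2 p3
```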

Step 3: Target 75%+ gross margin

This is where you truly start to scale and achieve high profitability. The levers here are about transforming your business model:

Productize services: Identify your most common custom scraping jobs and turn them into repeatable, packaged feeds or products that can be sold to multiple customers.

Create data products: Move beyond raw data delivery and create higher-margin data products with built-in intelligence and analytics.

Strengthen customer success: Focus on making your customers successful. A successful customer will not only stay with you (low churn) but will also want more data sources and higher frequency, leading to high upsell and a strong Net Revenue Retention (NRR).
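The margin gain from productization comes from spreading a fixed scraping cost across many buyers. The cost model below (one fixed monthly cost to run the scraper plus a small per-customer delivery and support cost) and all the prices in it are hypothetical.

```python
def feed_gross_margin(price: float, customers: int, fixed_cost: float, cost_per_customer: float) -> float:
    """Gross margin of one packaged feed sold to many customers, under a simple cost model."""
    revenue = price * customers
    cogs = fixed_cost + cost_per_customer * customers  # shared scraper cost + per-customer delivery/support
    return (revenue - cogs) / revenue


# Hypothetical: $4,000/month to run the scraper, $100/month per customer, $2,000/month price.
for customers in (1, 3, 10):
    margin = feed_gross_margin(2_000.0, customers, 4_000.0, 100.0)
    print(f"customers={customers}: {margin:.1%}")
# customers=1: -105.0%
# customers=3: 28.3%
# customers=10: 75.0%
```

With these made-up numbers the feed loses money with a single buyer but reaches a 75% gross margin at ten.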

The future is now: What you can do tomorrow

The good news is that technology, particularly artificial intelligence, is providing new levers to pull in our quest for profitability. AI can be applied across the business to drive down costs and create better products:

Cost reductions: AI models can create smarter crawlers that auto-adjust to anti-bot changes, reducing failed requests and lowering proxy spend. AI can also be used for intelligent resource allocation, predicting which proxy or browser to use for a given task to optimize consumption.

Better products: Instead of just delivering raw data, you can use AI to provide curated intelligence, automated insights, and even predictive models (e.g., price prediction, trend forecasting), which command higher margins.

Smarter go-to-market: AI-powered sales and marketing tools can automate lead qualification, create personalized campaigns, and lower your Customer Acquisition Cost (CAC).
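Predicting which proxy to use does not have to start with a trained model. As a simpler stand-in for the AI-driven allocation described above, this sketch uses an epsilon-greedy bandit. It usually picks the tier with the lowest estimated cost per success and occasionally explores another tier. The `TierSelector` class, tier names, prices, and success rates are all hypothetical.

```python
import random


class TierSelector:
    """Epsilon-greedy choice of proxy tier by lowest estimated cost per successful request."""

    def __init__(self, prices, epsilon=0.1, seed=None):
        self.prices = dict(prices)  # tier -> price per request
        self.attempts = {tier: 0 for tier in self.prices}
        self.successes = {tier: 0 for tier in self.prices}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def estimated_cost_per_success(self, tier):
        # Laplace-smoothed success rate, so an untried tier starts at 50% instead of 0%.
        rate = (self.successes[tier] + 1) / (self.attempts[tier] + 2)
        return self.prices[tier] / rate

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.prices))  # explore
        return min(self.prices, key=self.estimated_cost_per_success)  # exploit

    def record(self, tier, succeeded):
        self.attempts[tier] += 1
        self.successes[tier] += int(succeeded)


# Simulated traffic with made-up true success rates per tier.
TRUE_SUCCESS = {"datacenter": 0.2, "residential": 0.95}
selector = TierSelector({"datacenter": 0.001, "residential": 0.004}, epsilon=0.1, seed=7)
sim = random.Random(42)
for _ in range(1_000):
    tier = selector.choose()
    selector.record(tier, sim.random() < TRUE_SUCCESS[tier])
print(selector.attempts)
```

With these made-up rates, residential's true cost per success (0.004 / 0.95, about $0.0042) is lower than datacenter's (0.001 / 0.2 = $0.005). The selector should therefore tend to shift traffic toward residential as evidence accumulates.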

Building a profitable web scraping business is a marathon, not a sprint. It requires discipline, a deep understanding of your numbers, and a constant drive for efficiency.

Here are four things you can do tomorrow to start improving your profitability:

Audit your COGS. Break down your costs for proxies, infrastructure, and support. Ask yourself: Where is the single biggest lever I can pull to reduce costs?

Test AI in one workflow. Don't try to boil the ocean. Pick one area—QA, proxy allocation, or even lead generation—and run a small pilot with an AI tool to measure its impact.

Identify one service to productize. Look at your custom jobs. Is there one that is repeatable? Start the process of turning it into a scalable data product or packaged feed.

Double down on customer success. The easiest way to grow revenue is through your existing customers. Map out how automation and better service can improve your customer retention and increase your Net Revenue Retention (NRR).
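For the COGS audit, a few lines of code can rank your cost lines so the biggest lever stands out. The line items and monthly amounts below are hypothetical placeholders for your own numbers.

```python
def cogs_breakdown(cogs_lines: dict[str, float]) -> list[tuple[str, float]]:
    """Return (line item, share of total COGS) pairs, largest share first."""
    total = sum(cogs_lines.values())
    shares = [(name, amount / total) for name, amount in cogs_lines.items()]
    return sorted(shares, key=lambda pair: pair[1], reverse=True)


# Hypothetical monthly COGS for a scraping operation.
monthly_cogs = {"infrastructure": 9_000.0, "proxies": 14_000.0, "support": 7_000.0, "tools": 10_000.0}

for name, share in cogs_breakdown(monthly_cogs):
    print(f"{name}: {share:.1%}")
# proxies: 35.0%
# tools: 25.0%
# infrastructure: 22.5%
# support: 17.5%
```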

The path is challenging, but by knowing your costs, understanding your margins, and making strategic investments in R&D and productization, you can build a truly profitable and scalable web scraping business.