A crucial tool for any web scraping team

Web data extraction is a powerful practice for developers, enabling the collection of valuable data from websites for a variety of use cases. However, it's not just about sending requests and collecting data—there's a strategic layer that involves simulating organic user behavior in order to avoid detection and collect the data you need. Managing proxies and handling website bans are perhaps the biggest time suck for data teams, which is why today we’re bringing you the magic of Sessions!

What exactly is a session, and why is it so crucial in web scraping projects?

Watch this short video I made about using Sessions in web scraping 👇👇👇

What is a Session?

In the context of web scraping, a session is a set of request conditions—such as an IP address, cookies, and network stack—that makes multiple requests appear as part of an organic browsing session. Essentially, sessions help maintain the state of a user’s interaction with a website across multiple requests. This is particularly important when dealing with websites that require user authentication or have user-specific data.

Maintaining State

One of the most significant advantages of using sessions is their ability to maintain state. Many websites require users to log in before accessing certain content or performing specific actions. Without sessions, each request made during the scraping process would be treated as if it’s coming from a new, unauthenticated user. This means that you'd need to log in repeatedly, making the process inefficient and prone to errors.

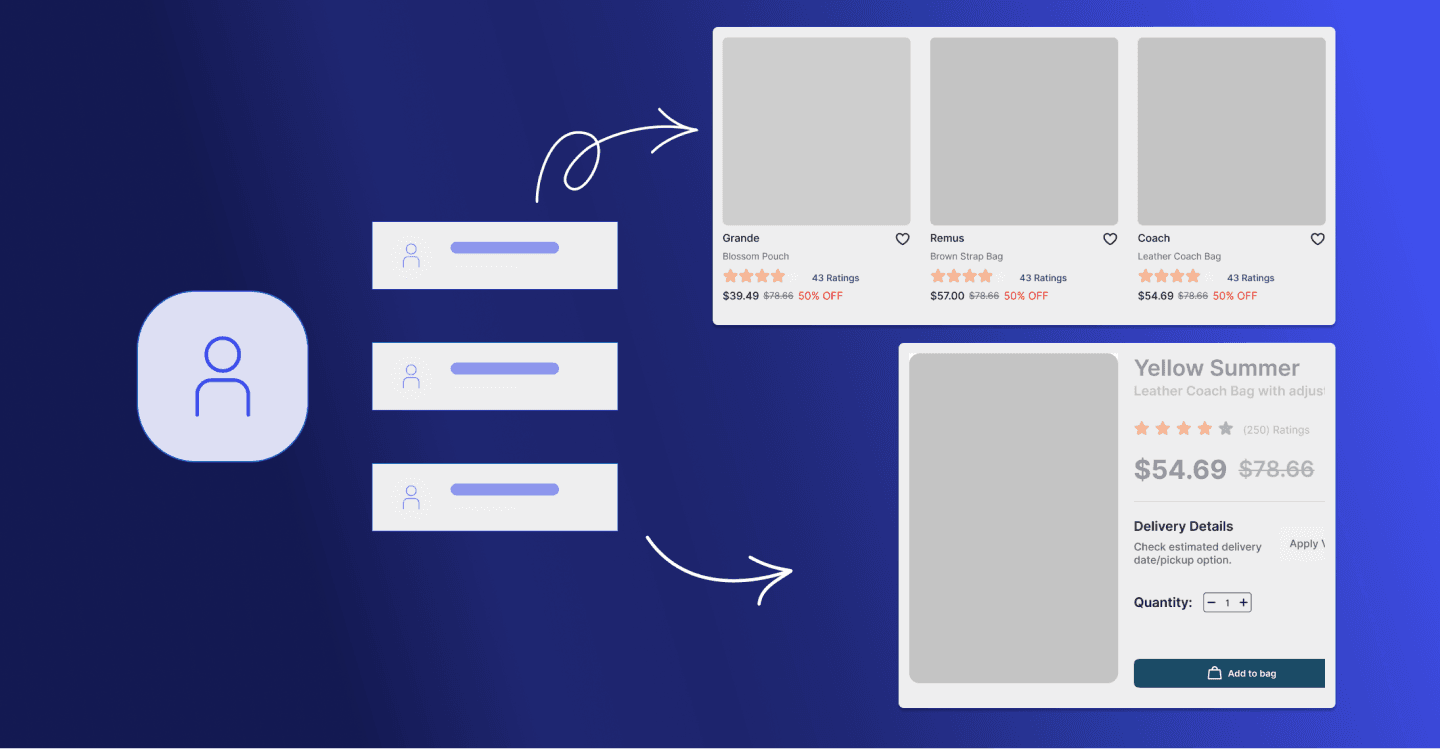

By using sessions, you can stay logged in across multiple requests, ensuring continuous access to restricted content. For example, if you're scraping an e-commerce website, sessions allow you to maintain the logged-in state while retrieving order history, wishlists, or personalized recommendations. This continuity is essential for accessing user-specific data without interruptions.

Handling Multi-Page Forms

Sessions also play a critical role in handling multi-page forms. Many websites, especially e-commerce platforms, use multi-page forms for checkout processes or user registrations. Scraping these forms without sessions can be problematic, as each step may require data from the previous one, and losing this continuity could lead to errors or incomplete data collection.

With sessions, you can ensure that the state of the form is preserved across different pages, allowing you to scrape all the necessary information without manual intervention. This seamless navigation through multi-step processes makes sessions indispensable in complex scraping tasks.

Boosting Efficiency

Efficiency is another critical factor where sessions make a difference. Establishing a new connection for every request can be inefficient and resource-intensive. Sessions allow you to reuse existing connections and associated data, such as cookies and headers, resulting in faster and more efficient scraping. This is particularly important when dealing with large volumes of data or when scraping websites with rate limits or anti-scraping measures in place.

By reusing connections and data, sessions help reduce overhead and speed up your scraping process, enabling you to collect more data in less time. This is especially useful when scraping extensive product listings or user-specific pages.

Bypassing Anti-Scraping Measures

Lastly, sessions are essential for bypassing anti-scraping measures. Many websites implement sophisticated techniques to detect and block scraping bots. By making your requests appear as part of an organic browsing session, sessions help you simulate organic behavior, reducing the risk of being banned.

In conclusion, sessions are a critical tool in any web scraper’s arsenal. They help maintain state, handle cookies, boost efficiency, and bypass anti-scraping measures, making your web scraping projects more robust and effective. Whether you're scraping a simple webpage or navigating a complex multi-step process, leveraging sessions is key to ensuring success.