Scrapy tips from the pros: part 1

Scrapy is at the heart of Zyte (formerly Scrapinghub). We use this framework extensively and have accumulated a wide range of shortcuts to get around common problems. We’re launching a series to share these Scrapy tips with you so that you can get the most out of your daily workflow. Each post will feature two to three tips, so stay tuned.

Use Extruct to Extract Microdata from Websites

I am sure every developer of web crawlers has had a reason to curse web developers who use messy layouts for their websites. Websites with no semantic markup, and especially those based on HTML tables, are the absolute worst. These types of websites make scraping much harder because there are few or no clues about what each element means. Sometimes you even have to trust that the order of the elements on each page will remain the same to grab the data you need.

This is why we are so grateful for Schema.org, a collaborative effort to bring semantic markup to the web. This project provides web developers with schemas to represent a range of different objects in their websites, including Person, Product, and Review, using metadata formats such as Microdata, RDFa, and JSON-LD. It makes the job of search engines easier because they can extract useful information from websites without having to dig into the HTML structure of all the websites they crawl.

For example, AggregateRating is a schema used by online retailers to represent user ratings for their products. Here’s the markup that describes user ratings for a product in an online store using the Microdata format:

```html
<div itemprop="aggregateRating" itemscope="" itemtype="http://schema.org/AggregateRating">
  <meta itemprop="worstRating" content="1">
  <meta itemprop="bestRating" content="5">
  <div class="bbystars-small-yellow">
    <div class="fill" style="width: 88%"></div>
  </div>
  <span itemprop="ratingValue" aria-label="4.4 out of 5 stars">4.4</span>
  <meta itemprop="reviewCount" content="305733">
</div>
```

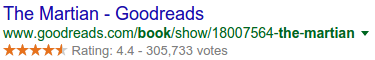

This way, search engines can show the ratings for a product alongside the URL in the search results, with no need to write specific spiders for each website:

You can also benefit from the semantic markup that some websites use. We recommend Extruct, a library that extracts embedded metadata from HTML documents. It parses the whole HTML document and returns the microdata items in a Python dictionary. Take a look at how we used it to extract microdata showing user ratings:

```python
>>> from extruct.w3cmicrodata import MicrodataExtractor
>>> mde = MicrodataExtractor()
>>> data = mde.extract(html_content)
>>> data
{
  'items': [
    {
      'type': 'http://schema.org/AggregateRating',
      'properties': {
        'reviewCount': '305733',
        'bestRating': '5',
        'ratingValue': u'4.4',
        'worstRating': '1'
      }
    }
  ]
}
>>> data['items'][0]['properties']['ratingValue']
u'4.4'
```
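If you are curious what reading microdata involves, here is a rough standard-library sketch that collects the `itemprop` values from the rating markup shown earlier. It is only an illustration and not how Extruct is implemented: the `ItempropCollector` class is a hypothetical name, and it assumes a single flat item with no nested scopes.

```python
from html.parser import HTMLParser


class ItempropCollector(HTMLParser):
    """Hypothetical helper: collects flat itemprop values from one item."""

    def __init__(self):
        super().__init__()
        self.properties = {}
        self._pending = None  # itemprop whose text content we are waiting for

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        prop = attrs.get("itemprop")
        if prop is None:
            return
        if "itemscope" in attrs:
            # This element opens an item (the AggregateRating itself),
            # so it has no plain value of its own.
            return
        if "content" in attrs:
            # <meta itemprop="..." content="..."> keeps its value in an attribute
            self.properties[prop] = attrs["content"]
        else:
            # Otherwise the value is the element's text
            self._pending = prop

    def handle_data(self, data):
        if self._pending and data.strip():
            self.properties[self._pending] = data.strip()
            self._pending = None


html_content = """
<div itemprop="aggregateRating" itemscope="" itemtype="http://schema.org/AggregateRating">
  <meta itemprop="worstRating" content="1">
  <meta itemprop="bestRating" content="5">
  <span itemprop="ratingValue" aria-label="4.4 out of 5 stars">4.4</span>
  <meta itemprop="reviewCount" content="305733">
</div>
"""

collector = ItempropCollector()
collector.feed(html_content)
print(collector.properties)
```

The resulting dictionary holds the same four properties that Extruct reports above. Real pages nest items inside each other and mix in other attributes, which is exactly the bookkeeping a dedicated library saves you from.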

Now, let’s build a spider using Extruct to extract prices and ratings from the Apple products website. This website uses microdata to store information about the products listed. It uses this structure for each of the products:

```html
<div itemtype="http://schema.org/Product" itemscope="itemscope">
  <img src="/images/MLA02.jpg" itemprop="image" />
  <a href="/shop/product/MLA02/magic-mouse-2?" itemprop="url">
    <span itemprop="name">Magic Mouse 2</span>
  </a>
  <div class="as-pinwheel-info">
    <div itemprop="offers" itemtype="http://schema.org/Offer" itemscope="itemscope">
      <meta itemprop="priceCurrency" content="USD">
      <span class="as-pinwheel-pricecurrent" itemprop="price">
        $79.00
      </span>
    </div>
  </div>
</div>
```

With this setup, you don’t need to use XPath or CSS selectors to extract the data that you need. It is just a matter of using Extruct’s MicrodataExtractor in your spider:

```python
import scrapy
from extruct.w3cmicrodata import MicrodataExtractor


class AppleSpider(scrapy.Spider):
    name = "apple"
    allowed_domains = ["apple.com"]
    start_urls = (
        'http://www.apple.com/shop/mac/mac-accessories',
    )

    def parse(self, response):
        extractor = MicrodataExtractor()
        items = extractor.extract(response.body_as_unicode(), response.url)['items']
        for item in items:
            if item.get('properties', {}).get('name'):
                properties = item['properties']
                yield {
                    'name': properties['name'],
                    'price': properties['offers']['properties']['price'],
                    'url': properties['url']
                }
```

This spider generates items like this:

```python
{
    "url": "http://www.apple.com/shop/product/MJ2R2/magic-trackpad-2?fnode=4c",
    "price": u"$129.00",
    "name": u"Magic Trackpad 2"
}
```
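Note that the price comes back as the text displayed on the page, currency symbol included. If you need a number downstream, a small cleanup step could look like the sketch below. The `parse_price` helper is a hypothetical name, and it assumes US-style prices such as `$1,299.00`; other locales would need different handling.

```python
from decimal import Decimal


def parse_price(raw):
    """Hypothetical helper: turn a string like ' $79.00 ' into Decimal('79.00')."""
    # Drop surrounding whitespace, the leading dollar sign, and thousands separators
    cleaned = raw.strip().lstrip("$").replace(",", "")
    return Decimal(cleaned)


item = {"name": "Magic Trackpad 2", "price": "$129.00"}
item["price"] = parse_price(item["price"])
print(item["price"])  # 129.00
```

`Decimal` is used here instead of `float` so that the cents are kept exactly as they appear on the page.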

So, when the site you’re scraping uses microdata to add semantic information to its contents, use Extruct. It is a much more robust solution than relying on a uniform page layout or wasting time investigating the HTML source.

Use js2xml to Scrape Data Embedded in JavaScript Code Snippets

Have you ever been frustrated by the differences between the web page rendered by your browser and the web page that Scrapy downloads? This is probably because some of the contents from that web page are not in the response that the server sends to you. Instead, they are generated in your browser through JavaScript code.

You can solve this issue by passing the requests to a JavaScript rendering service like Splash. Splash runs the JavaScript on the page and returns the final page structure for your spider to consume.

Splash was designed specifically for this purpose and integrates well with Scrapy. However, in some cases, all you need is something simple like the value of a variable from a JavaScript snippet, so using such a powerful tool would be overkill. This is where js2xml comes in. It is a library that converts JavaScript code into XML data.

For example, say an online retailer website loads the ratings of a product via JavaScript. Mixed in with the HTML lies a piece of JavaScript code like this:

```html
<script type="text/javascript">
  var totalReviewsValue = 32;
  var averageRating = 4.5;
  if(totalReviewsValue != 0){
    events = "...";
  }
  ...
</script>
```

To extract the value of averageRating using js2xml, we first have to extract the <script> block and then use js2xml to convert the code into XML:

```python
>>> js_code = response.xpath("//script[contains(., 'averageRating')]/text()").extract_first()
>>> import js2xml
>>> parsed_js = js2xml.parse(js_code)
>>> print js2xml.pretty_print(parsed_js)
<program>
  <var name="totalReviewsValue">
    <number value="32"/>
  </var>
  <var name="averageRating">
    <number value="4.5"/>
  </var>
  <if>
    <predicate>
      <binaryoperation operation="!=">
        <left><identifier name="totalReviewsValue"/></left>
        <right><number value="0"/></right>
      </binaryoperation>
    </predicate>
    <then>
      <block>
        <assign operator="=">
          <left><identifier name="events"/></left>
          <right><string>...</string></right>
        </assign>
      </block>
    </then>
  </if>
</program>
```

Now, it is just a matter of building a Scrapy Selector and then using XPath to get the value that we want:

```python
>>> js_sel = scrapy.Selector(_root=parsed_js)
>>> js_sel.xpath("//program/var[@name='averageRating']/number/@value").extract_first()
u'4.5'
```
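Because the converted code is ordinary XML, any XML tool can query it. As a rough sketch, here is the same lookup done with the standard library's `xml.etree.ElementTree` on a shortened copy of the dump above. The `xml_text` string is pasted in by hand for illustration; in a spider you would keep working with the parsed tree that js2xml returns.

```python
import xml.etree.ElementTree as ET

# Shortened copy of the pretty-printed js2xml output (assumption: pasted by hand)
xml_text = """
<program>
  <var name="totalReviewsValue">
    <number value="32"/>
  </var>
  <var name="averageRating">
    <number value="4.5"/>
  </var>
</program>
""".strip()

root = ET.fromstring(xml_text)
# Find the <number> child of the <var> whose name attribute is averageRating
number = root.find("./var[@name='averageRating']/number")
average_rating = float(number.get("value"))
print(average_rating)  # 4.5
```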

While you could probably write a regular expression with the speed of thought to solve this problem, a JavaScript parser is more reliable. The example we used here is pretty simple, but in more complex cases regular expressions can be a lot harder to maintain.
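To see how a regular expression can go wrong, consider the sketch below. The pattern handles the snippet from this example, but it is fooled by a hypothetical variation in which an old value was left behind in a comment. Both the pattern and the `tricky_js` string are made up for illustration.

```python
import re

# A quick pattern for "var averageRating = <number>"
PATTERN = re.compile(r"var\s+averageRating\s*=\s*([\d.]+)")

simple_js = "var totalReviewsValue = 32; var averageRating = 4.5;"
print(PATTERN.search(simple_js).group(1))  # 4.5

# Hypothetical variation: an old value left in a commented-out line
tricky_js = "// var averageRating = 0.0;\nvar averageRating = 4.5;"
print(PATTERN.search(tricky_js).group(1))  # 0.0, taken from the comment
```

You could patch the pattern to skip comments, and then patch it again for the next surprise. A parser that understands JavaScript syntax is not misled by text that merely looks like code.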

Use Functions from w3lib.url to Scrape Data from URLs

Sometimes the piece of data that you are interested in is not alone inside an HTML tag. Instead, you need to get the values of some parameters from URLs listed in the page. For example, you might be interested in getting the values of the ‘username’ parameters from URLs listed in the HTML:

```html
<div class="users">
  <ul>
    <li><a href="/users?username=johndoe23">John Doe</a></li>
    <li><a href="/users?active=0&username=the_jan">Jan Roe</a></li>
    …
    <li><a href="/users?active=1&username=janie&ref=b1946ac9249&gas=_ga=1.234.567">Janie Doe</a></li>
  </ul>
</div>
```

You might be tempted to use your regex superpowers, but calm down, w3lib is here to save the day with a more dependable solution:

```python
>>> from w3lib.url import url_query_parameter
>>> url_query_parameter('/users?active=0&username=the_jan', 'username')
'the_jan'
```
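If you want to see what this kind of lookup involves, a rough equivalent can be sketched with Python's standard `urllib.parse` module and applied to all the links from the HTML above. The `query_parameter` function is a hypothetical name used for illustration, and this is not a description of how w3lib implements `url_query_parameter`.

```python
from urllib.parse import urlsplit, parse_qs


def query_parameter(url, name, default=None):
    """Hypothetical stdlib analogue: first value of a query parameter."""
    values = parse_qs(urlsplit(url).query).get(name)
    return values[0] if values else default


hrefs = [
    "/users?username=johndoe23",
    "/users?active=0&username=the_jan",
    "/users?active=1&username=janie&ref=b1946ac9249&gas=_ga=1.234.567",
]

usernames = [query_parameter(href, "username") for href in hrefs]
print(usernames)  # ['johndoe23', 'the_jan', 'janie']
```

The parameter is found wherever it sits in the query string, and the extra `=` signs in the last URL cause no trouble, which is where a hand-written regex tends to slip.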

If w3lib is new to you, take a look at the documentation. We’ll be covering some of the other features of this Python library later in our Scrapy Tips From the Pros series.

Wrap Up

Share how you used our tips in the comments below. If you have any suggestions on scenarios that you’d like covered or tips you’d like to see, let us know!

More tips are coming your way, so buckle up!